Combining a number of audio tracks into a single audio master file

Mixing a Music session

- In Audacity open and listen to the file OriginalJingleMix.wav. How many different types of sound can you hear? Can you name the instruments or other sound sources?

I heard drums, bass, keyboard and guitar; these all play a riff throughout the music.

I also heard vocals, a gong and a Hammond organ of the kind you would hear in soul music.

- Session musicians recorded each of these tracks. Listen to each instrument track individually. Make notes on the quality of each sound. Quality of sound is very subjective, so use any adjective that you feel is appropriate to describe each sound, e.g. does the bass sound smooth or punchy? Is the guitar jangly or distorted? How loud does each track sound in relation to one another?

TimpAndPiano- In the first half, the timpani are struck loudly twice, followed by a pause and then 3 more strikes; the echo makes it sound as if they were recorded in a large hall. In the second half, a low-pitched piano riff plays: 2 notes, a pause, then 4 notes, with the last note played 2 more times, quietly, as the music fades out.

SweepBass- The first half of the bassline is a 3-note repetition; the second half repeats a single note plucked so heavily that we hear its vibration as an echo. The whole bassline is heavily distorted, which makes it sound punchy.

Rickenbacker Bass Guitar- A series of 2-note repetitions is followed by muted plucks and then 3-note repetitions. There is a brief solo at the end, followed by one note's echo; then another note is played and we hear its echo. This bassline is much less distorted than the SweepBass, which makes it sound smoother.

FunkGuitar- This funk guitar plays very high chords with occasional muted chords and is distorted. The piece ends with the echo of the final chord. The guitar is very jangly, which makes it sound like 80s surfer bands to me. The track is quieter than the basslines.

Drums1- The drum beat uses a cymbal, a bass drum pedal and a snare drum. It is the loudest and clearest instrument so far, which is important since the other instruments probably follow the drum beat. Surprisingly, a gong is struck at the end.

DopeOrgan- The most notable feature of the song is this organ, which doesn't start until 2 seconds in. A chord is played and held for half a second, followed by some short, spiky single notes and then another chord; this is repeated 3 times.

DloopandAnnouncer- The track starts with 2.5 seconds of silence; then a drum is struck loudly, followed quickly by 2 lighter strikes, and this is repeated 3 more times. After a 7-second pause, an announcer says the line "sounds beyond cool" in an exaggerated, distorted voice.

CEPVoices- The vocals don't start until 5.5 seconds in. It is a cantata ("accompanied singing") by 4 men; however, the first line is sung by one man, the second by two men, the third by three men and the fourth by all four. I detect a hint of voice effects, perhaps autotune. The voices all harmonize well, and the track quickly fades out.

- Look at each track in the time domain and frequency domain and make note of any distinct features, e.g. what time each sound event occurs, its loudness in dB, its duration and its envelope shape. Which frequencies does it tend to occupy? How do these features inform your own personal impression of the sound as you hear it?

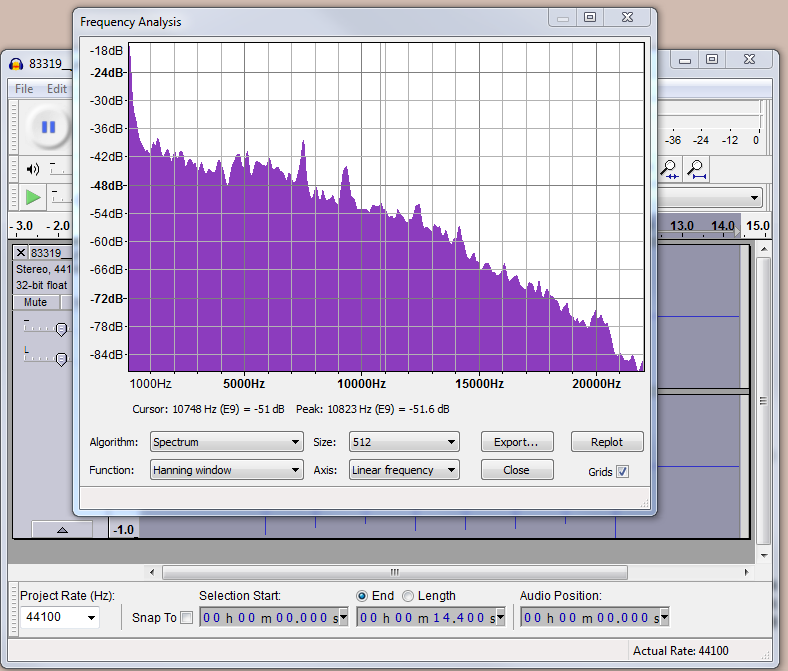
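The loudness figures below were presumably read from Audacity's waveform or meter display. Audacity measures levels relative to digital full scale (dBFS), where 0 dB is the largest value a sample can hold, so real peaks sit at or below 0 dB. The conversion from a peak sample value to dBFS is 20 × log10(peak). The minimal sketch below assumes the track is a list of float samples normalised to [-1.0, 1.0]. The helper name `peak_dbfs` is hypothetical.

```python
import math

def peak_dbfs(samples):
    """Return the peak level of a track in dBFS.

    Assumes samples are floats normalised to [-1.0, 1.0], where 1.0 is
    digital full scale (0 dBFS).
    """
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # digital silence has no finite level
    return 20 * math.log10(peak)

# A hypothetical track whose loudest sample reaches half of full scale.
print(round(peak_dbfs([0.1, -0.5, 0.25]), 1))  # -6.0
```

Going the other way, a peak of -24 dB corresponds to 10 ** (-24 / 20) ≈ 0.063 of full scale. Every 6 dB drop roughly halves the amplitude.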

TimpAndPiano- Starts at 0 seconds and fades out around 5 seconds in. It hits a trough of -36 dB and has a low pitch. Its frequency content caps at about 7 kHz.

SweepBass- Starts at 0 seconds and hits a height of -24 dB. The waveform has lots of breaks and gets lower-pitched over the first half. The maximum frequency is roughly 5 kHz.

Rickenbacker Bass Guitar- Starts at 0 seconds and hits a height of -24 dB. There are a number of muted notes where there would usually be breaks. The highest frequency is 7.7 kHz.

FunkGuitar- Starts around half a second into the track and fades out between 11 and 14 seconds. It peaks at -39 dB and 7.7 kHz.

Drums1- Starts at 0 seconds; the gong is struck around 11 seconds in and fades out by 16 seconds. It has the loudest amplitude of all the tracks at -27 dB and the highest frequency at 22 kHz.

DopeOrgan- Starts 2 seconds in and ends 11 seconds in. Each long held note is followed by short spikes of notes. The track peaks at 36 dB and about 10.8 kHz.

DloopandAnnouncer- The first part of the track runs from 2.5 to 5.5 seconds. The second part starts at 12.5 seconds and fades out at 16 seconds. The drum waveform clearly repeats 4 times, while the voice waveform is unpredictable and varies in amplitude. The track peaks at 27 dB and 21.8 kHz.

CEPVoices- Starts at 5.5 seconds and fades out by 13.5 seconds. There are few breaks, and the voices are fairly high-pitched. Peaks at 24 dB and 22 kHz.

- Use the mix feature of Audacity to combine the tracks into the final mix for this jingle. Be sure to press Ctrl+A to select all of each track before mixing them down to a single waveform. Check that the waveform sounds as expected (it should be identical to the OriginalJingleMix track).

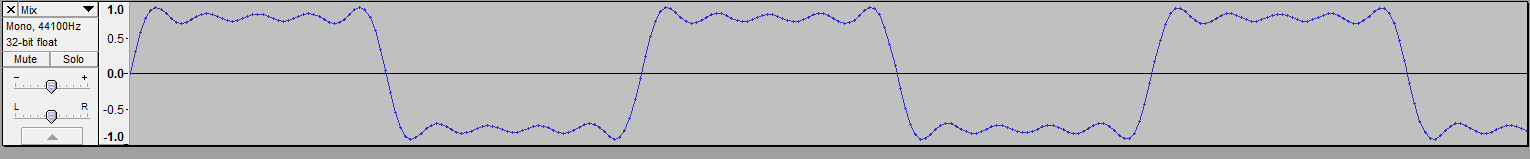

The waveform I created sounds exactly the same as the OriginalJingleMix track.
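At the sample level, mixing digital tracks essentially comes down to adding their samples together. The minimal sketch below assumes each track is a list of float samples in [-1.0, 1.0] at the same sample rate, mixed at unity gain with no panning. Audacity's Mix and Render also applies each track's gain and pan settings, and this sketch ignores them. If the original jingle was produced by summing the same tracks at unity gain, a plain sum would reproduce it exactly, which is consistent with the result above. The function name `mix` is hypothetical.

```python
def mix(tracks):
    """Mix equal-rate tracks into one by adding them sample by sample.

    Shorter tracks are treated as silence once they end. The result is
    clipped to [-1.0, 1.0], mimicking what happens when a mix that exceeds
    full scale is saved in an integer format such as 16-bit PCM WAV.
    """
    length = max(len(t) for t in tracks)
    mixed = []
    for i in range(length):
        total = sum(t[i] for t in tracks if i < len(t))
        mixed.append(max(-1.0, min(1.0, total)))  # hard clip at full scale
    return mixed

drums = [0.5, -0.25, 0.0, 0.25]  # hypothetical sample values
bass = [0.25, 0.25, -0.5]
print(mix([drums, bass]))  # [0.75, 0.0, -0.5, 0.25]
```

The clipping step is why engineers leave headroom: eight tracks that each peak near -6 dB can easily sum past 0 dB.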

Creating your own version of the mix

Using the previously explored features of Audacity, modify the component wav files before mixing to produce a new style of mix…

- Make the bass guitar less prominent, make the guitar sound smoother and make the whole mix sound as if it was recorded in a large room.

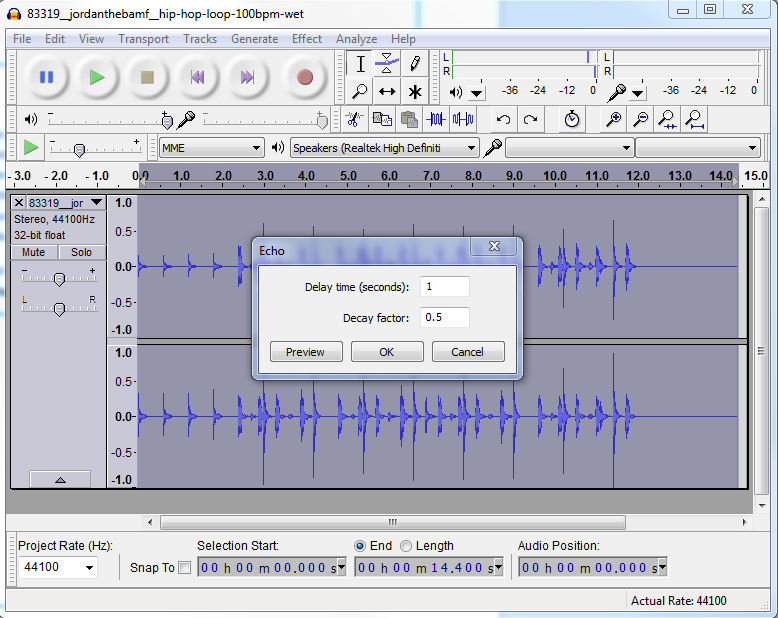

I used the equalizer effect to balance the amplitude of the piece, making the bass guitars less prominent.
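Equalization changes the level of particular frequency bands. A simpler way to make a whole track less prominent, shown here only as an alternative and not as the method used above, is a plain gain change in decibels, like Audacity's Amplify effect with a negative value. The minimal sketch assumes float samples. The function name `apply_gain_db` and the sample values are hypothetical.

```python
def apply_gain_db(samples, gain_db):
    """Scale every sample by a gain given in decibels.

    Negative values make the track quieter: -6 dB roughly halves the
    amplitude and -20 dB divides it by ten.
    """
    factor = 10 ** (gain_db / 20)  # convert dB to a linear multiplier
    return [s * factor for s in samples]

bass = [0.5, -0.4, 0.2]  # hypothetical bass guitar samples
quieter = apply_gain_db(bass, -20)
print([round(s, 3) for s in quieter])  # [0.05, -0.04, 0.02]
```

Unlike an equalizer, this lowers every frequency in the track by the same amount, so the bass keeps its tone and simply sits further back in the mix.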

I changed the frequency of the guitar to make it slightly less high-pitched and increased its amplitude to make it louder. For the large-room effect I used reverb with the room size set to 100%.
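Reverb imitates a room by adding many delayed, gradually decaying copies of the sound. The simplest building block of classic digital reverbs is the feedback comb filter, y[n] = x[n] + g · y[n − D]. The minimal sketch below shows only that building block. It is not Audacity's actual Reverb algorithm, which combines more stages. The idea that a larger "room size" roughly corresponds to a longer delay and slower decay is an assumption for illustration. The function name `comb_reverb` is hypothetical.

```python
def comb_reverb(samples, delay, feedback, tail=0):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay].

    delay is in samples (>= 1); feedback (0 <= feedback < 1) sets how
    slowly the echoes die away; tail appends silent samples so the decay
    is not cut off at the end of the input.
    """
    if delay < 1:
        raise ValueError("delay must be at least one sample")
    if not 0 <= feedback < 1:
        raise ValueError("feedback must be in [0, 1) to keep the filter stable")
    x = list(samples) + [0.0] * tail
    y = []
    for n, s in enumerate(x):
        echo = feedback * y[n - delay] if n >= delay else 0.0
        y.append(s + echo)
    return y

# A single click followed by silence shows the decaying echoes.
print(comb_reverb([1.0], delay=2, feedback=0.5, tail=6))
# [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```

Each echo is half the size of the previous one, which is the exponential decay you hear when a drum hit rings out in a large hall.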

Matching an audio track to video events

In the early days this was called “Mickey mousing”, where an organist in the cinema would improvise sounds to match the events taking place in cartoons and movies. This aimed to create a sense of drama, suspense, excitement and surprise in the mind of the viewer. We can do the same with video, computer games or animation. Open the OldMovie file using Microsoft Movie Maker. Watch the film many times and make a note of any events that you consider important. When do they start? When do they stop? Are they sudden or gradual?

The music starts as the title card of the video appears and keeps playing as we see the person swing, as if we are meant to think he was already swinging on the flying fox before we saw him.

The guitar stops suddenly at the same time the person puts his feet down to stop himself.

The guitarist resumes finger plucking as the person unharnesses himself, and a chord is abruptly played as soon as he finishes.

The guitar resumes as soon as we see another person start swinging along.

One note is held while there is a time skip, kind of like an ellipsis.

Around 35 seconds in, the music starts to play slowly and calmly, as if the video will end soon.

At 45 seconds the final chord plays as the conclusion to the video and the ending title shows up.

Open-ended task

Use Wavosaur and any wavs from today’s exercise or freesound.org to create an appropriate Mickey mouse track for the video, being careful to match audio event times to video event times. Once again, time, effort and experimentation are key here. When you are happy with the audio track, mix it into the video and ask a fellow student to assess your final product. (Use Microsoft Movie Maker to replace the audio track with the one that you have created.)

Keep all of your audio and video work from today’s session, as you will be required to submit it in week 12.

I added an audience cheer whenever someone is about to complete their swing. Each cheer is amplified so it gets louder every time it's played, and there are 4 cheers in total, one for each swing. I did this to make the video gradually more exciting. The music is very laid back and relaxing, which contrasts with the excitement of the swinging, so I added the cheers to help portray the excitement the people swinging felt at the time.
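Matching audio to video events means converting each event time into a sample position (time in seconds × sample rate) and adding the clip there. The minimal sketch below places a cheer clip at several times with a rising gain, in the spirit of the escalating cheers described above. The function name `place_clip`, the tiny sample rate, the clip and the event times are all hypothetical placeholders chosen to keep the output readable; none of them are measured from the video.

```python
def place_clip(track, clip, start_s, sample_rate, gain=1.0):
    """Add clip into track starting at start_s seconds, scaled by gain.

    The track is extended with silence if the clip runs past its end.
    Summed values are not clipped here.
    """
    start = round(start_s * sample_rate)  # event time -> sample index
    needed = start + len(clip)
    if needed > len(track):
        track.extend([0.0] * (needed - len(track)))
    for i, s in enumerate(clip):
        track[start + i] += gain * s
    return track

SAMPLE_RATE = 4          # hypothetical, unrealistically low for readability
cheer = [0.25, 0.25]     # hypothetical cheer clip
track = [0.0] * 8
for k, t in enumerate([0.5, 1.0]):  # placeholder swing times in seconds
    place_clip(track, cheer, t, SAMPLE_RATE, gain=1.0 + k)  # each cheer louder
print(track)  # [0.0, 0.0, 0.25, 0.25, 0.5, 0.5, 0.0, 0.0]
```

At a real rate of 44,100 Hz, a cheer at 12.5 seconds would start at sample 551,250.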

Here is a link to my Mickey mouse track for the video:

https://www.youtube.com/watch?v=PO3Ivj6ooY8